# Using TensorFlow 1.13.1

import tensorflow as tf

import tensorflow.keras as keras

import numpy as np

import os

import matplotlib.pyplot as plt

from datetime import datetime

# Add tensorflow.keras imports

from keras import models

from keras import layers

from keras.utils import to_categorical

from keras.datasets import mnist

print("TensorFlow:", tf.__version__, " Keras:", keras.__version__)

MNIST Digits Dataset¶

# Read Keras MNIST dataset

(train_images, train_labels), (test_images, test_labels) = mnist.load_data()

print("train_images.shape:", train_images.shape, "train_labels len:", len(train_labels))

# First three labels

print("MNIST First four labels:\n", train_labels[:4])

# First digit

print("MNIST First digit (partial crop):\n", train_images[0,4:26,6:24])

Preparing MNIST Digits for Input to MLP¶

Convert MNIST images for perceptron input

- from 2-D arrays of 28 x 28 pixels,

- to 1-D vector of 784 (28*28) numbers, scaled to range [0, 1].

# MNIST images as normalized (_, 28*28) tensors for MLP

train_images_mlp = train_images.reshape((60000, 28 * 28))

train_images_mlp = train_images_mlp.astype('float32') / 255

test_images_mlp = test_images.reshape((10000, 28 * 28))

test_images_mlp = test_images_mlp.astype('float32') / 255

# First digit

(shape0, mean0) = (train_images_mlp[0].shape[0], np.mean(train_images_mlp[0]))

(min0, max0) = (np.min(train_images_mlp[0]), np.max(train_images_mlp[0]))

print("MNIST First digit shape:", shape0, "mean:", mean0, "- min, max:", min0, max0)

# Convert MNIST labels [0-9] to categorical

train_labels_cat = to_categorical(train_labels)

test_labels_cat = to_categorical(test_labels)

# First three labels

print("MNIST First three labels:\n", train_labels[:4])

print("First three labels categorical:\n", train_labels_cat[:4])

# Define MLP: one hidden layer of 256 nodes - 10-class softmax output

mlp = models.Sequential()

mlp.add(layers.Dense(256, activation='relu', input_shape=(28 * 28,)))

mlp.add(layers.Dense(10, activation='softmax'))

mlp.compile(optimizer='rmsprop', loss='categorical_crossentropy', metrics=['accuracy'])

# Summarize MLP

mlp.summary()

Train Multi-Layer Perceptron on MNIST¶

- Use Keras

mlp.fit(train_inputs, train_outputs)

# Train MLP; using validation_split = 0.2

history = mlp.fit(train_images_mlp, train_labels_cat,

epochs=10, batch_size=128, validation_split=0.2)

# Visualize MLP accuracy, loss on MNIST training and validation data

# Define acc, loss plot function

def plot_acc_loss(history):

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

accuracy_template = "Epochs: {}, Best training acc: {}, Best val_acc: {}"

print(accuracy_template.format(len(epochs), np.max(acc), np.max(val_acc)))

plt.figure(figsize=(18,5))

plt.subplot(121)

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.xlabel('Epoch')

plt.ylabel('Accuracy')

plt.legend()

plt.subplot(122)

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.xlabel('Epoch')

plt.ylabel('Loss')

plt.legend()

plt.show()

return None

plot_acc_loss(history)

Evaluate Multi-Layer Perceptron on MNIST¶

- Use Keras

mlp.evaluate(test_inputs, test_outputs)

# Evaluate MLP classifier

test_loss, test_acc = mlp.evaluate(test_images_mlp, test_labels_cat)

print('MLP Test accuracy:', test_acc, '- MLP Test loss:', test_loss)

CNN Elements: Filters, Layers, Strides and Padding¶

Figure

Convolutional Neural Network MNIST Classifier (CNN)¶

- CNN (~98% with one hidden layer) - skip

- CNN (~99.2% with three hidden layers, total trainable params: 130,890)

- CNN Test accuracy: 0.9919

Contrast CNN trainable parameters and accuracy (~99.2%, trainable params: 130,890) to single-layer MLP (~98% with one hidden layer, 256 nodes; total trainable params: 203,530).

# Keras CNN MNIST classifier - Three-Layer Convolutional Neural Network

# CNN input (28, 28, 1) images - CNN output (3, 3, 64) tensor

cnn = models.Sequential()

cnn.add(layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)))

cnn.add(layers.MaxPooling2D((2, 2)))

cnn.add(layers.Conv2D(64, (3, 3), activation='relu'))

cnn.add(layers.MaxPooling2D((2, 2)))

cnn.add(layers.Conv2D(64, (3, 3), activation='relu'))

# Fully-connected (MLP) output classifier - 10-class softmax output

cnn.add(layers.Flatten())

cnn.add(layers.Dense(128, activation='relu'))

cnn.add(layers.Dense(10, activation='softmax'))

cnn.compile(optimizer='rmsprop', loss='categorical_crossentropy', metrics=['accuracy'])

# Model summary

cnn.summary()

Preparing MNIST Digits for Input to CNN¶

Convert MNIST images for CNN input

- from 2-D array of 28 x 28 pixels,

- to 3-D tensor of shape (28, 28, 1), scaled to range [0, 1].

# MNIST images as (_, 28, 28, 1) tensors for CNN input

train_images_cnn = train_images.reshape((60000, 28, 28, 1))

train_images_cnn = train_images_cnn.astype('float32') / 255

test_images_cnn = test_images.reshape((10000, 28, 28, 1))

test_images_cnn = test_images_cnn.astype('float32') / 255

print("train_images_cnn.shape:", train_images_cnn.shape)

print("train_labels_cat len:", len(train_labels_cat))

Train CNN with MLP output classifier¶

# Train CNN with MLP output classifier

history = cnn.fit(train_images_cnn, train_labels_cat,

epochs=10, batch_size=64, validation_split=0.1)

# Plot acc, loss

plot_acc_loss(history)

Evaluate CNN classifier¶

# Evaluate CNN classifier

test_loss, test_acc = cnn.evaluate(test_images_cnn, test_labels_cat)

print('CNN Test accuracy:', test_acc, 'CNN Test loss:', test_loss)

MNIST with Traditional Machine Learning¶

Multinomial MNIST Classifiers¶

Homework: Naive Bayes (NB), Stochastic Gradient Descent (SGD)¶

GaussianNB, SGDClassifier from Scikit-Learn

- e.g., sklearn.GaussianNB, sklearn.SGDClassifier (MNIST accuracy of ~85%)

Feature engineering¶

Feature engineering is important, more so for shallow MLP and traditional statistical classifiers.

- Input scaling (e.g., sklearn.StandardScaler on MNIST improves accuracy to ~90%)

- Deep networks beat hand-engineered features, with optimal, automatically learned features of, for example, CNN filters

Capsules Neural Network MNIST Classifier (CapsNet)¶

- Capsule Networks (CapsNet, Sabour, X, Hinton, 2017; Zhen, KPU, 2019) - skip

MNIST State-of-the-Art (SOTA)¶

MNIST Test accuracy stands at 99.83% (in 2019)¶

Record (03/2019)¶

- Zhao et al., 2019, report absolute MNIST error rate reduction from previous best 0.21% (99.79% accuracy) to 0.17% (99.83% accuracy).

- Capsule Networks with Max-Min Normalization, Zhen Zhao, Ashley Kleinhans, Gursharan Sandhu, Ishan Patel, K. P. Unnikrishnan, 2019, https://arxiv.org/abs/1903.09662.

Others (2017 - 2018)¶

Regularization of neural networks using dropconnect, Li Wan, Matthew D Zeiler, Sixin Zhang, Yann LeCun, and Rob Fergus, ICML 2013. 99.79% accuracy (0.39% error rate; 0.21% with ensembling)

Dynamic Routing Between Capsules, Sara Sabour, Nicholas Frosst, Geoffrey E Hinton, 2017 (https://arxiv.org/abs/1710.09829) 99.75% accuracy (0.25% error rate)

Simple 3-layer CNN above (total params, 130,890) without any training regularization:

acc: 99.15%

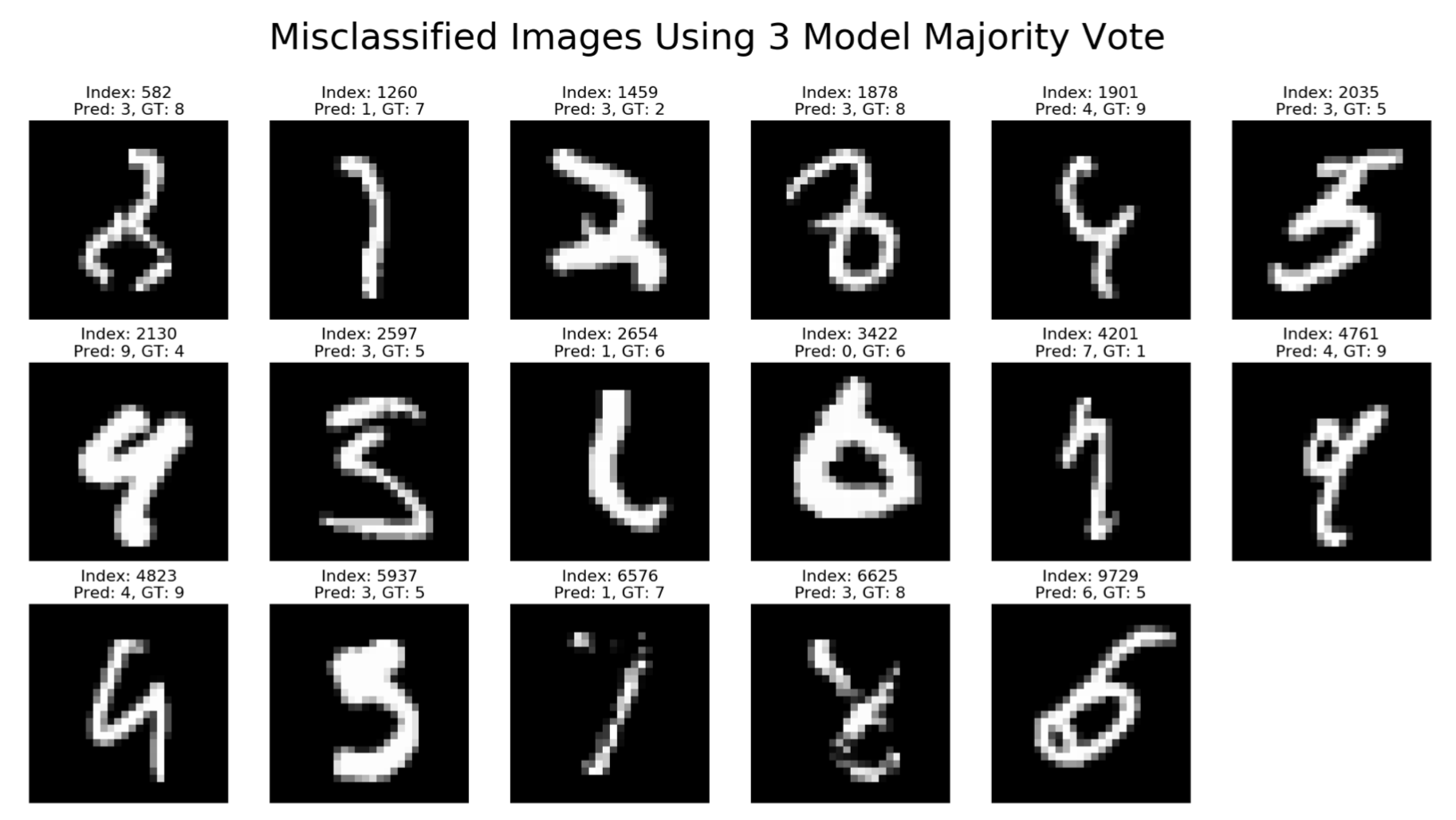

CapsNet with Max-Min: MNIST Test set errors¶

Misclassified MNIST images using 3-model majority vote from CapsNets trained using Max-Min normalization

- Total of 17 digit errors in 10,000 digit test set (99,83% test accuracy)

- MNIST digit index 6576 - Ground Truth: "7"

- current deep networks recognize as "1"; no human makes such error

Source: Capsule Networks with Max-Min Normalization, Zhao et al., 2019

After 30 years, MNIST Test must be considered a validation set¶

... it is no longer a test set.¶

MNIST Test can no longer be considered a proper Test set. It is a digit classification Validation set.

The MNIST dataset availability and focus on the MNIST task since 1989 have made the MNIST, 10,000 digit "Test" set into a "validation" dataset.

- New test sets for MNIST, different from the original 1990 one, are needed!

- A new digit recognition benchmark is needed.

MNIST improvements are near the end (only 20 digits left for improvement)¶

The MNIST Test set has a total of 60,000 training and 10,000 test digits.

Zhao et al., 2019, use single classifiers with recognition error on only 20 to 23 digits of the 10,000 MNIST test digits.